What if there were another way to deliver the computing power of a "scale up" machine using the low-cost hardware common to a "scale out" architecture? What if there were a way to make a server as big or as small as it needs to be, on the fly? There is, and it's called the TidalScale Software-Defined Server.

"Scale up" versus "Scale out"

Your enterprise generates vast amounts of data, much of it extremely time-sensitive. Whether the application calls for real-time monitoring of production equipment, forecasting raw material demand, tracking stock trades, or deciphering genomes, speed and capacity for mission-critical apps are of the essence. Meeting these demands typically requires in-memory analytics and/or in-memory database solutions.

For example, one well-known in-memory solution, SAP HANA, requires specialized machines and huge amounts of memory to process the terabytes of information created by data-intensive applications. These compute assets, such as IBM Power or HPE Superdome servers, are examples of "scale up" systems: they pack dozens or even hundreds of cores and up to 48 terabytes of memory into a single machine, and they are extremely expensive. Because most of the applications running on these servers are mission-critical, enterprises typically need three identical systems: one for production, one for disaster recovery (DR), and one for test/dev. The result is a multi-million-dollar infrastructure investment.

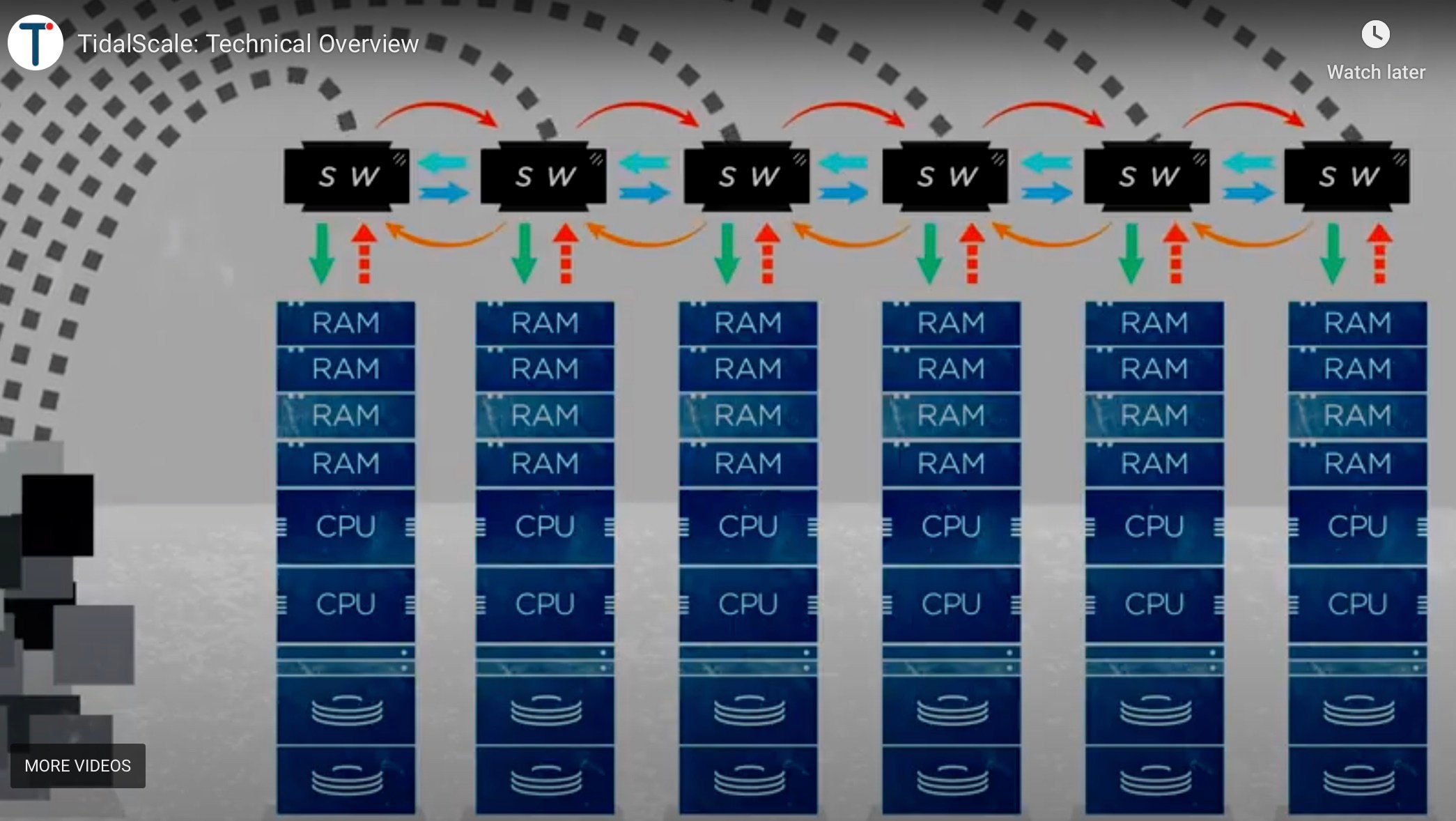

The alternative "scale out" architecture, used by most public clouds, amplifies the power of its compute assets by virtualizing large numbers of x86-class processors to run large numbers of workloads. When it reaches maximum capacity, a cloud provider simply adds more hardware to absorb the additional workloads. This model works well when serving many applications with small in-memory data needs, but it is not appropriate for "scale up" workloads that focus on a single task with massive in-memory requirements. Distributing workloads and data across many servers, then reassembling the results of their individual computations, requires too much heavy lifting for timely analysis (Figure 1).

The Software-Defined Server changes the way servers are perceived

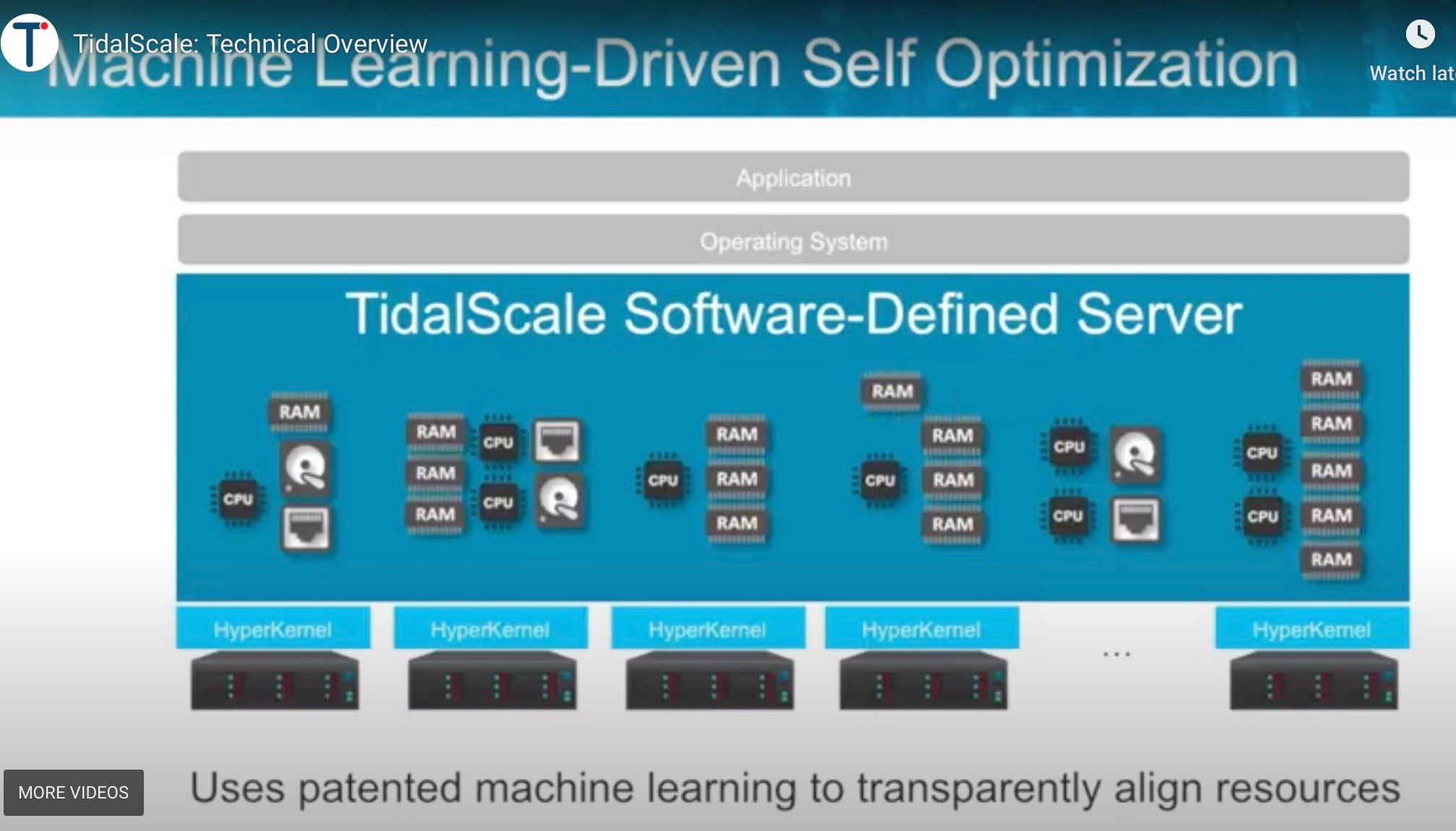

With TidalScale's inverse hypervisor software, administrators can compose a single virtual server from a cluster of commodity servers, with computing resources equal to those of a "scale up" machine. It does this with standard, off-the-shelf x86 hardware, running workloads on an unmodified Linux operating system (Windows Server support is coming in spring 2021). TidalScale sits between the hardware and operating system layers, allowing administrators to quickly provision as many resources as the application demands from a pool of commodity hardware. Then, instead of using the divide-and-conquer strategy of the "scale out" model, it presents all the assets to the application as a single machine running a single OS.

Take, for example, a dozen HPE ProLiant DL360 Gen10 servers, each with 32 cores and half a terabyte (512 GB) of memory. Individually, none of them has enough memory for a multi-terabyte big data workload. With TidalScale, these assets can be composed, through software, into one "scale up" machine with 384 cores and six terabytes (6 TB) of linear memory to run the entire workload efficiently. Scalability is virtually unlimited; you'll never run out of processing or memory assets again. Need more power? Simply add more servers from the cluster pool to the software-defined server. Cores and memory within the cluster are transparently moved and reallocated in microseconds to fit the application and optimize performance (Figure 2).
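The totals in this example come from simple addition across the nodes. The short Python sketch below checks that arithmetic. It assumes every node contributes all of its cores and memory to the composed server and ignores any capacity the software layer might reserve for itself; the `Node` type and `compose` function are hypothetical illustrations, not part of any TidalScale API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical description of one commodity server's resources."""
    cores: int
    memory_gb: int


def compose(nodes: list[Node]) -> Node:
    """Sum cores and memory across nodes.

    Assumption: no overhead is deducted for the hypervisor layer, so this
    is an upper bound on what the composed server could expose.
    """
    return Node(
        cores=sum(n.cores for n in nodes),
        memory_gb=sum(n.memory_gb for n in nodes),
    )


# Twelve identical nodes, each with 32 cores and 512 GB, as in the example above.
cluster = [Node(cores=32, memory_gb=512) for _ in range(12)]
server = compose(cluster)
print(server.cores, server.memory_gb, server.memory_gb / 1024)
```

Here 12 × 32 cores gives 384 cores, and 12 × 512 GB gives 6,144 GB, which is the 6 TB quoted above when a terabyte is counted as 1,024 GB.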

In fact, a TidalScale software-defined server has been shown to outperform "scale up" machines. Its built-in machine learning (ML) monitors running workloads and learns how best to use cores and memory pages, so performance continues to improve over time.

The value proposition for TidalScale is simple: it allows enterprises to manage and squeeze the most value from their data by combining fleets of low-cost computing assets into one unified platform that can be used to handle the most data-intensive, time-sensitive, mission-critical applications.

TidalScale saves money by linking multiple inexpensive commodity servers to do the job of a multi-million-dollar "scale up" server. It saves time by compressing deployment from months or even years to just a few weeks. It improves flexibility by allowing administrators to freely add, move, or reassign servers in the cluster. It strengthens your overall infrastructure by allowing groups of machines to be used for production, disaster recovery, and test/dev, and to be redeployed as needed. TidalScale does all this through its HTML5-based UI, remotely if required, without modifying applications or operating systems.

As an early partner and adopter of TidalScale software-defined server technology, IIS has the expertise to help you scale up by composing multiple servers into a single entity. With TidalScale, you can eliminate the configuration headaches and huge investment usually required for heavy analytics or database workloads such as SAP HANA, Oracle Exadata, Sybase, KDB, or Apache Spark environments.

Zero risk trial

Have a few old 2U x86-class servers collecting dust in a closet? Give IIS a call or visit us at iistech.com and let us build a proof of concept at no cost to show you how we can optimize your architecture using existing assets to meet your "scale up" workloads.
